Colorful Huegene Experiments

I came up with an idea for generating images.

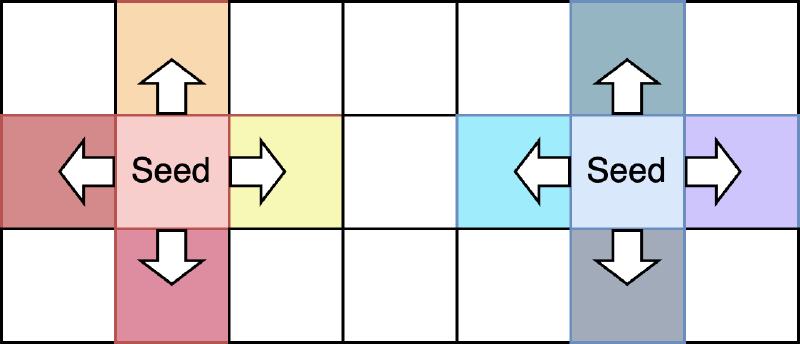

Start by creating an empty image and placing one or more seed pixels with a color of your choice in it. Every empty pixel around these seeds is now part of a front that will be expanded outwards. Then repeat until the whole image is filled: pick a pixel from the front, fill it with color, and add all empty pixels around it to the front.
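The steps above can be sketched in Python roughly as follows. This is a minimal sketch, not the code behind the images in this post: the names `neighbors` and `fill_image`, the 4-connected neighborhood, and the first-in-first-out order of the front are all assumptions made for illustration.

```python
from collections import deque


def neighbors(x, y, width, height):
    """Yield the 4-connected neighbors of (x, y) that lie inside the image."""
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < width and 0 <= ny < height:
            yield nx, ny


def fill_image(width, height, seeds, color):
    """Fill a width x height image outwards from the seed pixels."""
    image = [[None] * width for _ in range(height)]  # None marks an empty pixel
    front = deque()   # empty pixels waiting to be filled
    in_front = set()  # every pixel ever added to the front, to avoid duplicates

    def add_empty_neighbors(x, y):
        for nx, ny in neighbors(x, y, width, height):
            if image[ny][nx] is None and (nx, ny) not in in_front:
                in_front.add((nx, ny))
                front.append((nx, ny))

    # Place all seeds first, then build the initial front around them.
    for x, y in seeds:
        image[y][x] = color
    for x, y in seeds:
        add_empty_neighbors(x, y)

    # Expand the front until no empty pixel is left.
    while front:
        x, y = front.popleft()
        image[y][x] = color
        add_empty_neighbors(x, y)
    return image
```

Calling `fill_image(5, 4, [(2, 1)], (255, 128, 0))` returns a 4-row, 5-column grid in which every pixel holds the same orange tuple.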

This is known as the flood-fill algorithm (see Wikipedia), so called because you flood the image with paint. The Wikipedia page has some helpful visualizations.

Filling an image with a single color is not that interesting, which is where my idea comes in: every pixel remembers its parent, the filled pixel that added it to the front, and gets filled with a color that differs slightly from its parent’s color.
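One way to derive a child color from its parent is to nudge each RGB channel by a small random amount. The sketch below assumes 8-bit RGB tuples; the function name `mutate` and the `strength` parameter are hypothetical and chosen only for this example.

```python
import random


def mutate(parent_color, strength=8, rng=random):
    """Return a color whose channels differ from the parent's by at most `strength`."""
    return tuple(
        # Shift the channel randomly, then clamp it to the valid 0..255 range.
        max(0, min(255, channel + rng.randint(-strength, strength)))
        for channel in parent_color
    )
```

A larger `strength` lets colors drift faster between neighboring pixels; a value of 0 reproduces the plain single-color fill.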

This is easy to implement, so here is my first try. I placed a single black pixel in the middle and then filled the whole image.

This looks less dynamic than I had anticipated, but there is an explanation: I always pick the first pixel in the front to fill next. This linear strategy results in a linear-looking image. Picking a random pixel from the front instead makes the image look much more organic.
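The difference between the two strategies comes down to a single line: which index of the front gets filled next. The sketch below puts the pieces together under several assumptions of my own choosing: the name `grow`, the `pick` and `strength` parameters, the 4-connected neighborhood, and seeds passed as a dictionary from position to color. It is meant as an illustration, not as the exact code used for the images.

```python
import random


def grow(width, height, seeds, strength=8, pick="random", rng=None):
    """Fill an image from `seeds`, a dict mapping (x, y) to an (r, g, b) tuple."""
    rng = rng or random.Random()
    image = [[None] * width for _ in range(height)]  # None marks an empty pixel
    parent = {}  # front pixel -> the filled pixel that added it to the front
    front = []   # empty pixels waiting to be filled, in insertion order

    def expand(x, y):
        # Add every empty, not yet queued neighbor of (x, y) to the front.
        for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nx, ny = x + dx, y + dy
            if 0 <= nx < width and 0 <= ny < height:
                if image[ny][nx] is None and (nx, ny) not in parent:
                    parent[(nx, ny)] = (x, y)
                    front.append((nx, ny))

    for (x, y), color in seeds.items():
        image[y][x] = color
    for x, y in seeds:
        expand(x, y)

    while front:
        # "first" always takes the oldest front pixel, "random" takes any of them.
        index = 0 if pick == "first" else rng.randrange(len(front))
        x, y = front.pop(index)  # O(n) removal, acceptable for a sketch
        px, py = parent[(x, y)]
        image[y][x] = tuple(
            max(0, min(255, c + rng.randint(-strength, strength)))
            for c in image[py][px]
        )
        expand(x, y)
    return image
```

For example, `grow(200, 200, {(100, 100): (0, 0, 0)}, pick="first")` and the same call with `pick="random"` differ only in the order in which the front is consumed. Passing several entries in `seeds` starts the fill from multiple points at once.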

Reducing how strongly the color changes from parent to child gives a smoother image. For this image, I picked an orange seed and moved it off-center.

Here is an image with multiple seeds.

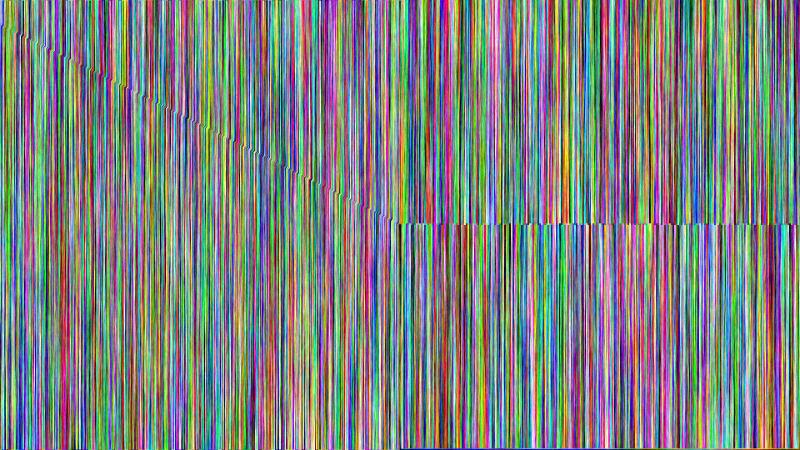

In all previous images, each pixel in the front was filled with a random variation of its parent’s color. For the following two images, I made up a new set of rules.

Inside a horizontal strip, which I call the horizon, the color is changed randomly, as before. For everything above the horizon, the color should gradually shift towards a deep blue. For everything below the horizon, the color should quickly change to black.
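These rules could be expressed as a color function that depends on the row of the pixel being filled. The sketch below is only one possible reading: the name `sunset_color`, the target color `DEEP_BLUE`, the blend amounts 0.05 and 0.5, and the convention that `y` grows downwards are all assumptions, not values taken from the images in this post.

```python
import random

DEEP_BLUE = (0, 0, 96)  # assumed target color for the sky
BLACK = (0, 0, 0)


def blend(color, target, amount):
    """Move `color` towards `target` by the given fraction (0 = no change, 1 = target)."""
    return tuple(round(c + (t - c) * amount) for c, t in zip(color, target))


def sunset_color(parent_color, y, horizon_top, horizon_bottom, rng, strength=8):
    """Pick a child color from the parent's color and the child's row `y`."""
    if y < horizon_top:
        # Above the horizon: drift slowly towards deep blue.
        return blend(parent_color, DEEP_BLUE, 0.05)
    if y > horizon_bottom:
        # Below the horizon: fall off quickly towards black.
        return blend(parent_color, BLACK, 0.5)
    # Inside the horizon strip: random variation, as before.
    return tuple(
        max(0, min(255, c + rng.randint(-strength, strength)))
        for c in parent_color
    )
```

Swapping a function like this in for the purely random color change leaves the rest of the fill untouched; only the way a child color is derived from its parent changes.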

This set of rules works out pretty well. Combined with a yellow seed on the horizon, this looks like a sunset. The edge of the horizon is colorful, making it look like forests and mountains are catching the last rays of the sun.

Huegene

The idea of modifying a flood-fill is not new. After a lot of searching on the web, the earliest mention of a similar algorithm I could find is by Dave Ackley, which is where I got the name Huegene.

From http://robust.cs.unm.edu/doku.php?id=ulam:demos:coevolution (Web Archive):

In 2002, Dave Ackley developed a coevolution simulation project called Huegene for a C++ programming course.

The model described in the article simulates plants and herbivores. The plants behave like the pixels in the algorithm I have shown above, spreading and changing color. In addition, there are herbivores, which can move around and eat plants that have a color similar to their own. When they produce offspring, the child has a slightly different color from its parent. The goal of the simulation is to show the herbivores and plants adapting to changing conditions in the biome around them. You can see it in action in this video by Ackley, which concludes this post on another colorful note.